Global Forum For Industrial Development is sponsored by ICO INDIA

- MP Society Registration (Act. 1973 No. 44) 03/27/01/21857/19 (MSME Forum Established Since-2009)

Supercomputer, any of a class of extremely powerful computers. The term is commonly applied to the fastest high-performance systems available at any given time. Such computers have been used primarily for scientific and engineering work requiring exceedingly high-speed computations. Common applications for supercomputers include testing mathematical models for complex physical phenomena or designs, such as climate and weather, evolution of the cosmos, nuclear weapons and reactors, new chemical compounds (especially for pharmaceutical purposes), and cryptology. As the cost of supercomputing declined in the 1990s, more businesses began to use supercomputers for market research and other business-related models.

Supercomputers have certain distinguishing features. Unlike conventional computers, they usually have more than one CPU (central processing unit), which contains circuits for interpreting program instructions and executing arithmetic and logic operations in proper sequence. The use of several CPUs to achieve high computational rates is necessitated by the physical limits of circuit technology. Electronic signals cannot travel faster than the speed of light, which thus constitutes a fundamental speed limit for signal transmission and circuit switching. This limit has almost been reached, owing to miniaturization of circuit components, dramatic reduction in the length of wires connecting circuit boards, and innovation in cooling techniques (e.g., in various supercomputer systems, processor and memory circuits are immersed in a cryogenic fluid to achieve the low temperatures at which they operate fastest). Rapid retrieval of stored data and instructions is required to support the extremely high computational speed of CPUs. Therefore, most supercomputers have a very large storage capacity, as well as a very fast input/output capability.

Still another distinguishing characteristic of supercomputers is their use of vector arithmetic—i.e., they are able to operate on pairs of lists of numbers rather than on mere pairs of numbers. For example, a typical supercomputer can multiply a list of hourly wage rates for a group of factory workers by a list of hours worked by members of that group to produce a list of dollars earned by each worker in roughly the same time that it takes a regular computer to calculate the amount earned by just one worker.
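The wage example can be sketched in a few lines of Python. This illustrates only the programming model, not vector hardware: Python itself still processes the list one element at a time. The names `hourly_rates`, `hours_worked`, `scalar_pay` and `vector_multiply`, and the sample figures, are made up for this sketch.

```python
# Illustrative data (hypothetical values, not taken from the article).
hourly_rates = [12.0, 15.0, 20.0]   # dollars per hour, one entry per worker
hours_worked = [40.0, 35.0, 10.0]   # hours, listed in the same worker order


def scalar_pay(rate, hours):
    """Scalar operation: one pair of numbers in, one number out."""
    return rate * hours


def vector_multiply(xs, ys):
    """Vector operation: a pair of equal-length lists in, one list out."""
    if len(xs) != len(ys):
        raise ValueError("vectors must have the same length")
    return [x * y for x, y in zip(xs, ys)]


one_worker = scalar_pay(hourly_rates[0], hours_worked[0])
all_workers = vector_multiply(hourly_rates, hours_worked)

print(one_worker)    # 480.0
print(all_workers)   # [480.0, 525.0, 200.0]
```

On a vector machine the whole of `vector_multiply` would correspond to a single hardware instruction stream over the two lists, which is where the speed advantage described above comes from.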

Supercomputers were originally used in applications related to national security, including nuclear weapons design and cryptography. Today they are also routinely employed by the aerospace, petroleum, and automotive industries. In addition, supercomputers have found wide application in areas involving engineering or scientific research, as, for example, in studies of the structure of subatomic particles and of the origin and nature of the universe. Supercomputers have become an indispensable tool in weather forecasting: predictions are now based on numerical models. As the cost of supercomputers declined, their use spread to the world of online gaming. In particular, the 5th through 10th fastest Chinese supercomputers in 2007 were owned by a company with online rights in China to the electronic game World of Warcraft, which sometimes had more than a million people playing together in the same gaming world.

Although early supercomputers were built by various companies, one individual, Seymour Cray, really defined the product almost from the start. Cray joined a computer company called Engineering Research Associates (ERA) in 1951. When ERA was taken over by Remington Rand, Inc. (which later merged with other companies to become Unisys Corporation), Cray left with ERA’s founder, William Norris, to start Control Data Corporation (CDC) in 1957. By that time Remington Rand’s UNIVAC line of computers and IBM had divided up most of the market for business computers, and, rather than challenge their extensive sales and support structures, CDC sought to capture the small but lucrative market for fast scientific computers. The Cray-designed CDC 1604 was one of the first computers to replace vacuum tubes with transistors and was quite popular in scientific laboratories. IBM responded by building its own scientific computer, the IBM 7030—commonly known as Stretch—in 1961. However, IBM, which had been slow to adopt the transistor, found few purchasers for its tube-transistor hybrid, regardless of its speed, and temporarily withdrew from the supercomputer field after a staggering loss, for the time, of $20 million. In 1964 Cray’s CDC 6600 replaced Stretch as the fastest computer on Earth; it could execute three million floating-point operations per second (FLOPS), and the term supercomputer was soon coined to describe it.

Cray left CDC to start Cray Research, Inc., in 1972 and moved on again in 1989 to form Cray Computer Corporation. Each time he moved on, his former company continued producing supercomputers based on his designs.

Cray was deeply involved in every aspect of creating the computers that his companies built. In particular, he was a genius at the dense packaging of the electronic components that make up a computer. By clever design he cut the distances signals had to travel, thereby speeding up the machines. He always strove to create the fastest possible computer for the scientific market, always programmed in the scientific programming language of choice (FORTRAN), and always optimized the machines for demanding scientific applications—e.g., differential equations, matrix manipulations, fluid dynamics, seismic analysis, and linear programming.

Among Cray’s pioneering achievements was the Cray-1, introduced in 1976, which was the first successful implementation of vector processing (meaning, as discussed above, it could operate on pairs of lists of numbers rather than on mere pairs of numbers). Cray was also one of the pioneers of dividing complex computations among multiple processors, a design known as “multiprocessing.” One of the first machines to use multiprocessing was the Cray X-MP, introduced in 1982, which linked two Cray-1 computers in parallel to triple their individual performance. In 1985 the Cray-2, a four-processor computer, became the first machine to exceed one billion FLOPS.

While Cray used expensive state-of-the-art custom processors and liquid immersion cooling systems to achieve his speed records, a revolutionary new approach was about to emerge. W. Daniel Hillis, a graduate student at the Massachusetts Institute of Technology, had a remarkable new idea about how to overcome the bottleneck imposed by having the CPU direct the computations between all the processors. Hillis saw that he could eliminate the bottleneck by eliminating the all-controlling CPU in favour of decentralized, or distributed, controls. In 1983 Hillis cofounded the Thinking Machines Corporation to design, build, and market such multiprocessor computers. In 1985 the first of his Connection Machines, the CM-1 (quickly replaced by its more commercial successor, the CM-2), was introduced. The CM-1 utilized an astonishing 65,536 inexpensive one-bit processors, grouped 16 to a chip (for a total of 4,096 chips), to achieve several billion FLOPS for some calculations—roughly comparable to Cray’s fastest supercomputer.

Hillis had originally been inspired by the way that the brain uses a complex network of simple neurons (a neural network) to achieve high-level computations. In fact, an early goal of these machines involved solving a problem in artificial intelligence, face-pattern recognition. By assigning each pixel of a picture to a separate processor, Hillis spread the computational load, but this introduced the problem of communication between the processors. The network topology that he developed to facilitate processor communication was a 12-dimensional “hypercube”—i.e., each chip was directly linked to 12 other chips. These machines quickly became known as massively parallel computers. Besides opening the way for new multiprocessor architectures, Hillis’s machines showed how common, or commodity, processors could be used to achieve supercomputer results.
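The hypercube wiring can be illustrated with a little bit arithmetic. This is a minimal sketch, assuming the 4,096 chips are numbered 0 to 4,095 and that two chips are linked exactly when their numbers differ in a single binary digit. That is the usual textbook way to label a hypercube; the article does not say how Thinking Machines actually numbered its chips, and the function names here are hypothetical.

```python
DIMENSIONS = 12                 # from the text: each chip links to 12 others
NUM_CHIPS = 2 ** DIMENSIONS     # 4,096 chips, one per corner of the hypercube


def neighbours(chip):
    """Chips whose label differs from `chip` in exactly one bit."""
    return [chip ^ (1 << d) for d in range(DIMENSIONS)]


def hops(a, b):
    """Fewest links between two chips: the number of bits in which they differ."""
    return bin(a ^ b).count("1")


print(NUM_CHIPS)                 # 4096
print(len(neighbours(0)))        # 12
print(neighbours(0)[:4])         # [1, 2, 4, 8]
print(hops(0, NUM_CHIPS - 1))    # 12
```

Under this labelling no two chips are ever more than 12 links apart, even though there are 4,096 of them.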

Another common artificial intelligence application for multiprocessing was chess. For instance, in 1988 HiTech, built at Carnegie Mellon University, Pittsburgh, Pa., used 64 custom processors (one for each square on the chessboard) to become the first computer to defeat a grandmaster in a match. In February 1996 IBM’s Deep Blue, using 192 custom-enhanced RS/6000 processors, was the first computer to defeat a world champion, Garry Kasparov, in a “slow” game. It was then assigned to predict the weather in Atlanta, Ga., during the 1996 Summer Olympic Games. Its successor (now with 256 custom chess processors) defeated Kasparov in a six-game return match in May 1997.

As always, however, the principal application for supercomputing was military. With the signing of the Comprehensive Test Ban Treaty by the United States in 1996, the need for an alternative certification program for the country’s aging nuclear stockpile led the Department of Energy to fund the Accelerated Strategic Computing Initiative (ASCI). The goal of the project was to achieve by 2004 a computer capable of simulating nuclear tests—a feat requiring a machine capable of executing 100 trillion FLOPS (100 TFLOPS; the fastest extant computer at the time was the Cray T3E, capable of 150 billion FLOPS). ASCI Red, built at Sandia National Laboratories in Albuquerque, N.M., with the Intel Corporation, was the first to achieve 1 TFLOPS. Using 9,072 standard Pentium Pro processors, it reached 1.8 TFLOPS in December 1996 and was fully operational by June 1997.

While the massively multiprocessing approach prevailed in the United States, in Japan the NEC Corporation returned to the older approach of custom designing the computer chip for its Earth Simulator, which surprised many computer scientists by debuting in first place on the industry’s TOP500 supercomputer speed list in 2002. It did not hold this position for long, however, as in 2004 a prototype of IBM’s Blue Gene/L, with 8,192 processing nodes, reached a speed of about 36 TFLOPS, just exceeding the speed of the Earth Simulator. Following two doublings in the number of its processors, the ASCI Blue Gene/L, installed in 2005 at Lawrence Livermore National Laboratory in Livermore, Calif., became the first machine to pass the coveted 100 TFLOPS mark, with a speed of about 135 TFLOPS. Other Blue Gene/L machines, with similar architectures, held many of the top spots on successive TOP500 lists. With regular improvements, the ASCI Blue Gene/L reached a speed in excess of 500 TFLOPS in 2007. These IBM supercomputers are also noteworthy for the choice of operating system, Linux, and IBM’s support for the development of open source applications.

The first computer to exceed 1,000 TFLOPS, or 1 petaflop, was built by IBM in 2008. Known as Roadrunner, for New Mexico’s state bird, the machine was first tested at IBM’s facilities in New York, where it achieved the milestone, prior to being disassembled for shipment to the Los Alamos National Laboratory in New Mexico. The test version employed 6,948 dual-core Opteron microchips from Advanced Micro Devices (AMD) and 12,960 of IBM’s Cell Broadband Engines (first developed for use in the Sony Computer Entertainment PlayStation 3 video system). The Cell processor was designed especially for handling the intensive mathematical calculations needed to handle the virtual reality simulation engines in electronic games—a process quite analogous to the calculations needed by scientific researchers running their mathematical models.

Such progress in computing placed researchers on or past the verge of being able, for the first time, to do computer simulations based on first-principles physics rather than merely on simplified models. This in turn raised prospects for breakthroughs in such areas as meteorology and global climate analysis, pharmaceutical and medical design, new materials, and aerospace engineering. The greatest impediment to realizing the full potential of supercomputers remains the immense effort required to write programs in such a way that different aspects of a problem can be operated on simultaneously by as many different processors as possible. Even managing this for fewer than a dozen processors, as are commonly used in modern personal computers, has resisted any simple solution, though IBM’s open source initiative, with support from various academic and corporate partners, made progress in the 1990s and 2000s.
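To make the programming problem concrete, here is a small sketch of the easiest possible case: a calculation that splits cleanly into independent pieces. It uses Python's standard `concurrent.futures` module; the function names and the choice of a sum of squares are assumptions made for illustration. Note that threads in standard Python do not usually run arithmetic truly in parallel, so this shows the decomposition pattern rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk):
    """Work one worker can do on its own: sum of squares of its chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, workers=4):
    """Split `values` into chunks, process them concurrently, then combine."""
    size = max(1, -(-len(values) // workers))    # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, chunks))
    return sum(partials)                          # combining step is sequential


data = list(range(1, 1001))
total = parallel_sum_of_squares(data)
print(total)   # 333833500
```

Real scientific codes are rarely this tidy: the pieces usually depend on one another's results, and arranging that communication is where most of the effort described above goes.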

According to a quote with several origins, science advances on the shoulders of giants. In our time, these words have taken on a special meaning thanks to a new class of giants—supercomputers—which nowadays are pushing the boundaries of science to levels that the human intellect would be incapable of reaching on its own.

In a few decades, the strength of these giants has multiplied dramatically: in 1985 the world’s most powerful supercomputer, the Cray-2, could perform 1.9 billion floating-point operations per second (FLOPS), the unit used to measure the power of these machines, or 1.9 gigaflops. By comparison, a current PlayStation 4 game console reaches 1.84 teraflops, almost a thousand times more. Today, there are at least 500 supercomputers in the world that can exceed a petaflop, or one quadrillion (10^15) FLOPS, according to the TOP500 list drawn up by experts from the Lawrence Berkeley National Laboratory and the universities of Mannheim (Germany) and Tennessee (USA).
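The comparison is easy to check using the standard metric prefixes (giga = 10^9, tera = 10^12, peta = 10^15). The short sketch below uses only the two figures quoted above; the variable names are illustrative.

```python
GIGA = 10**9
TERA = 10**12
PETA = 10**15

cray2_flops = 1.9 * GIGA     # Cray-2 (1985), figure quoted in the text
ps4_flops = 1.84 * TERA      # PlayStation 4, figure quoted in the text

# How many Cray-2s equal one PlayStation 4?
ps4_vs_cray2 = round(ps4_flops / cray2_flops)
print(ps4_vs_cray2)          # 968

# How many Cray-2s equal a one-petaflop machine?
petaflop_vs_cray2 = round(PETA / cray2_flops)
print(petaflop_vs_cray2)     # 526316
```

So "almost a thousand times" holds for the console, and a one-petaflop machine matches roughly half a million Cray-2s.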

Below we present what are currently the ten most powerful supercomputers in the world and some of their contributions to knowledge.

The world’s most powerful supercomputer today is Summit, built by IBM for the U.S. Department of Energy’s Oak Ridge National Laboratory in Tennessee. It occupies the equivalent of two basketball courts and achieves an impressive 148.6 petaflops thanks to its 2.41 million cores.

In addition to its large capacity, Summit is also the most energy-efficient machine in the top 10 of the world’s supercomputers. Its mission is civil scientific research, and since it came into operation in 2018 it has already participated in projects such as the search for genetic variants in the population related to diseases, the simulation of earthquakes in urban environments, the study of extreme climatic phenomena, the study of materials on an atomic scale and the explosion of supernovae, among others.

IBM is also responsible for the second most powerful supercomputer on the list, Sierra, located in California’s Lawrence Livermore National Laboratory. Based on Summit-like hardware, Sierra manages 94.6 petaflops.

Unlike its older brother, Sierra is dedicated to military research, more specifically to the simulation of nuclear weapons in place of underground tests, so its studies are classified material.

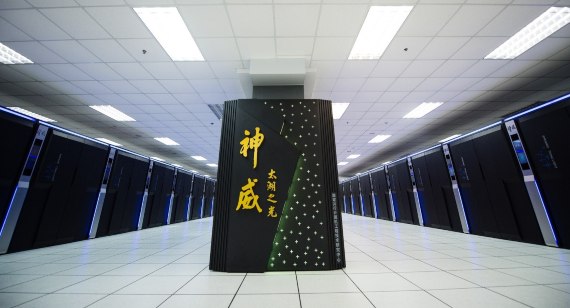

Until Summit and Sierra came into service in 2018, China was at the forefront of global supercomputing with TaihuLight, a machine built by the National Centre for Engineering Research and Parallel Computing Technology and installed at the National Supercomputing Centre in Wuxi. Unlike other machines of its calibre, it lacks accelerator chips, so its 93 petaflops depend on its more than 10 million Chinese Sunway processors.

TaihuLight is in a way a product of the trade war between China and the US, since its construction has completely dispensed with US technology, in response to the restrictions imposed by the US. This supercomputer has participated in research such as the simulation of the birth and expansion of the universe using 10 billion digital particles.

China also retains fourth place in the ranking with Tianhe-2A, or Milky Way 2A, developed by the National University of Defence Technology and equipped with Intel Xeon processors that allow it to reach 61.4 petaflops. According to its operators, the machine is used for computing related to government security, among other things.

The Texas Advanced Computing Center at the University of Texas at Austin has entered the top 10 in global supercomputing thanks to Frontera, a new system built by Dell and equipped with Intel processors. Frontera was unveiled in September 2019 as the world’s fastest supercomputer located at a university. Since June 2019, it has been collaborating with three dozen scientific teams on research related to the physics of black holes, quantum mechanics, drug design and climate models. Its 23.5 petaflops will be available to the scientific community, which will benefit from its computational capacity especially in the areas of astrophysics, materials science, energy, genomics and the modelling of natural disasters.

Europe’s most powerful system ranks sixth on the list. Piz Daint is a supercomputer named after an alpine mountain—whose image is displayed on its housing—located at the Swiss National Supercomputing Centre in Lugano. It is an upgrade of a system built by the American company Cray, founded by the father of supercomputing Seymour Cray and responsible for several of the world’s most powerful machines. Its Intel and NVIDIA processors give it a speed of 21.2 petaflops. Piz Daint is involved in extensive research in materials science, physics, geophysics, life sciences, climatology and data science.

Also a product of the Cray company is Trinity, the Los Alamos National Laboratory and Sandia National Laboratories system that is able to reach nearly 20.2 petaflops. This machine, which inherited its name from the first U.S. nuclear test in 1945, is mainly devoted to nuclear weapons-related calculations.

The 19.9 petaflops of ABCI, a system built by Fujitsu and belonging to Japan’s National Institute of Advanced Industrial Science and Technology, place this machine in eighth place in the ranking. One of its most striking features is its energy efficiency, a parameter in which it scores just below Summit. ABCI’s goal is to serve as a cloud-based Artificial Intelligence resource available to Japanese companies and research groups.

In 2018, the new-generation SuperMUC supercomputer officially came into service at the Leibniz Supercomputing Centre in Garching, near Munich (Germany). Built by Lenovo using its own and Intel technology, it is the most powerful supercomputer in the European Union, with a processing speed of 19.5 petaflops.

The top 10 closes with Lassen, Sierra’s little brother at Lawrence Livermore National Laboratory, built by IBM with the same architecture. Its recent improvements have increased its speed to 18.2 petaflops. Unlike its brother, Lassen is dedicated to unclassified research.